Big data experiments: new powers for Europol risk reinforcing police bias

11 February 2021

EU policing agency Europol could be given new powers to process vast quantities of personal data under proposals put forward by the European Commission in December. One objective is to train algorithms “for the development of tools” to be used by Europol and national law enforcement agencies, raising the risk of reinforcing racial and other biases.

Support our work: become a Friend of Statewatch from as little as £1/€1 per month.

New powers for Europol

A proposal to reinforce Europol’s legal basis was hurriedly published in December by the European Commission following pressure from the governments of France and Austria, where terrorist attacks in October and November killed seven people and left at least 20 wounded.

The proposal had been in the works for some time and aims to increase Europol’s powers in a number of ways: by facilitating the exchange of data with companies; easing the transfer of data to non-EU states; and giving Europol the remit to participate in investigations that only concern one EU member state (currently it is limited to investigations affecting two or more member states).

The proposal also seeks to give Europol the power to process ‘big data’, legalising existing practices that earned the agency a "formal admonishment" from the European Data Protection Supervisor in October last year, when an investigation found that Europol officials were unlawfully processing the personal data of vast numbers of people.

Europol already holds vast quantities of data. A recent presentation given by an agency official to the Council Working Party on Information Exchange (pdf), obtained by Statewatch, shows that in 2020 the Europol Information System contained "1,300M+ objects" and "~250,000 suspects of serious crime and terrorism". The new rules would see these numbers grow.

Europol recently produced an "action plan" in response to the EDPS' ruling, but the agency has also been criticised for its embrace of data-driven policing. Irish MEP Clare Daly has accused Europol of wanting to "use big data for intensive profiling, with AI and machine learning playing a significant role."

This much is evident from the new proposals. The primary goal of the new data-processing powers is to enhance the agency’s ability to assist member states "in processing large and complex datasets to support their criminal investigations with cross-border leads. This would include techniques of digital forensics to identify the necessary information and detect links with crimes and criminals in other Member States."

Law enforcement algorithms

However, the proposal would also allow these large datasets to be processed in the context of “research and innovation” projects, in particular those looking at “the development, training, testing and validation of algorithms for the development of tools.” This comes alongside a reinforced role in setting overall EU security research priorities, something that border agency Frontex has also recently taken on.

As with other new powers included in the proposal, the member states are fully supportive of the plans. A Council ‘wishlist’ published last November by Statewatch said:

"Europol must be capable of harnessing the potential of technological innovation… This includes the development and use of artificial intelligence for analysis and operational support. For this purpose, the EU Innovation Hub for Internal Security, located at Europol, must immediately begin its work and make technologies such as artificial intelligence and encryption a priority."

Europol already has some involvement in the development of new technologies. The agency currently participates in three EU-funded research projects (AIDA, GRACE and INFINITY), looking into the use of artificial intelligence, big data, machine learning and virtual and augmented reality for law enforcement purposes.

It is not clear what datasets are being used in these research projects, but making it possible for the agency’s own projects to use the data it has hoovered up from the member states and elsewhere raises the risk of reinforcing the biases that already exist in policing.

"Reflecting reality"

In November last year, Europol's Deputy Director for Governance, Jürgen Ebner, told a high-level conference hosted by the EU database agency eu-LISA that to train law enforcement algorithms "you need to use data that is reflecting reality. You need to make use of operational data."

However, the "reality" that data reflects is a very particular one. A report published by the European Network Against Racism in 2019 highlighted "the overwhelming evidence of discriminatory policing against racialised minority and migrant communities across Europe," and warned that feeding new technological tools with existing police data "will result advertently in the ‘hardwiring’ of historical racist policing into present day police and law enforcement practice."

The proposal includes some new safeguards for "research and innovation" activities – for example, the Executive Director will have to make an assessment of any potential bias in the outcome of projects and ways to address it; and Europol will have to keep "a complete and detailed description of the process and rationale behind the training, testing and validation of algorithms to ensure transparency and for verification of the accuracy of the results."

Given the scale of the datasets under discussion (potentially involving millions of people) and their unique provenance (primarily coming from law enforcement agencies), it may prove difficult to accurately assess the extent of the bias involved and to find a suitable yardstick against which to measure the accuracy of the results.

While the proposal also notes the need to "ensure transparency", it is not clear whether this is referring to the algorithm itself, the research and testing methods, or the results of any research – and there is no indication of exactly how far such transparency will extend. Will the algorithms produced by Europol be open for anyone to inspect?

Algorithms and action plans

In the recent EU anti-racism Action Plan, the European Commission underscored that racist policing "can damage trust in the authorities and lead to other negative outcomes, such as underreporting of crimes and resistance to public authority."

At the same time, it is proposing that Europol develop new technologies using vast quantities of personal data gathered by the very institutions that engage in discriminatory practices. Will that really help meet the Action Plan’s goal of "doing more to tackle racism in everyday life"?

Further reading

- EU: New counter-terror plans and more powers for Europol put rights at risk (22 December 2020)

- EU: Police seeking new technologies as Europol's "Innovation Lab" takes shape (29 October 2020)

- The Shape of Things to Come - EU Future report (September 2008, pdf)

- The activities and development of Europol - towards an unaccountable "FBI" in Europe (January 2002, pdf)

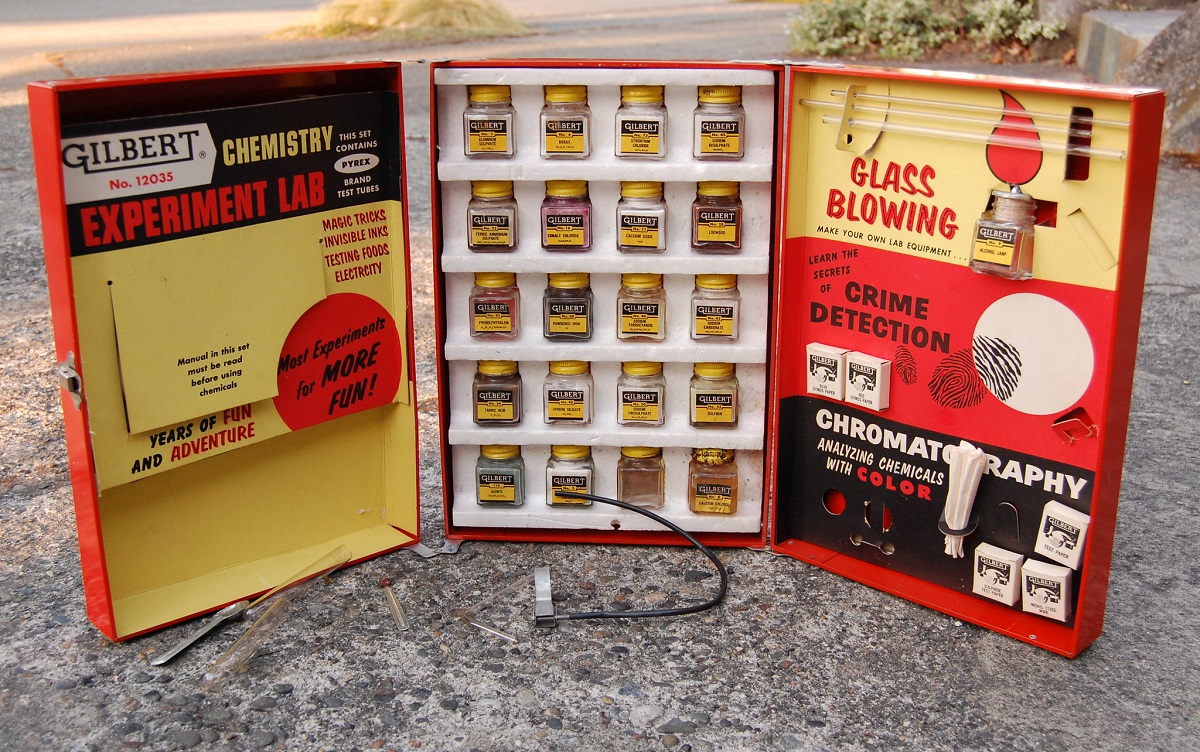

Image: steve lodefink, CC BY 2.0
