Police racism and criminalisation across Europe increasingly fuelled by digital 'prediction' and profiling systems

30 June 2025

London, 30 June 2025 – The civil liberties organisation Statewatch has published a report that reveals how police and criminal legal system authorities across Europe are using data-based, algorithmic and AI systems to ‘predict’ where crimes may occur and profile people as criminals, despite the EU’s apparent ban on so-called ‘predictive policing’ systems in the Artificial Intelligence Act.

Support our work: become a Friend of Statewatch from as little as £1/€1 per month.

Statewatch’s report (pdf) brings together and summarises in-depth and original research on these new technologies from partner organisations in Belgium, France, Germany, and Spain.

The report details how these so-called crime ‘prediction’ systems are increasingly embedded within, and influencing, the decision-making and actions of police and criminal legal system authorities. As a result, people and communities are put under surveillance and subjected to questioning, identity checks, stop and search, home raids and even arrests.

These data-based, algorithmic and AI tools also affect decisions throughout the criminal legal system, from pre-trial detention to prosecution, sentencing to probation. Outside of the criminal legal system, these so-called ‘predictions’ and profiles are leading to people being barred from employment, restricted from or denied access to essential services, and even deported.

The research also discusses and analyses the impact of these systems. It provides evidence on how marginalised groups and communities across Europe are disproportionately targeted and impacted by these systems, including Black and racialised people and communities, victims of gender-based violence, migrants, people from working-class and socio-economically deprived backgrounds and areas, and people with mental health issues.

These systems use historical data, for example from the police or criminal legal system, which reflects historic and existing biases within these institutions and within wider society. The result is the over-policing and criminalisation of marginalised communities, particularly racialised groups, migrants, and people from low-income neighbourhoods.

The report argues that the use of these systems by police and criminal legal system authorities:

- leads to racial and socio-economic profiling, discrimination and criminalisation.

- has significant consequences for individuals’ rights, including the right to a fair trial, privacy, and freedom from discrimination.

- is deliberately secretive and opaque, meaning that people are not aware that these systems are being used. The lack of transparency surrounding the development, training and operational use of these systems is a fundamental bar to justice and accountability.

Statewatch calls for a prohibition on the use of crime ‘prediction’ and profiling systems, and urges national and local legislatures to enact that prohibition in law.

Griff Ferris, Researcher at Statewatch, said:

“The concept of profiling and prediction has its roots in colonialism, and these new technologies are similarly being used to maintain and reinforce structural and institutional racism and violence by police and criminal legal systems.

“They are being used to provide authorities with the suspicion, the reasonable cause and the justification for police intervention: whether to monitor, stop and search, arrest – and even potentially deadly violence. The criminalisation of entire communities based on data and code has to stop. These systems must be banned.”

END

Notes to editors

Statewatch’s report, New Technology, Old Injustice: Data-driven discrimination and profiling in police and prisons in Europe (pdf), was produced in partnership with researchers working with Technopolice Belgium and La Ligue des droits humains in Belgium, La Quadrature du Net and Technopolice France in France, AlgorithmWatch in Germany and AlgoRace in Spain.

Statewatch produces and promotes critical research, policy analysis and investigative journalism to inform debates, movements and campaigns on civil liberties, human rights and democratic standards. We began operating in 1991 and are based in London.

For further information or to request an interview, please contact: comms@statewatch.org

Further reading

UK: Over 1,300 people profiled daily by Ministry of Justice AI system to ‘predict’ re-offending risk

Over 20 years ago, a system to assess prisoners’ risk of reoffending was rolled out in the criminal legal system across England and Wales. It now uses artificial intelligence techniques to profile thousands of offenders and alleged offenders every week. Despite serious concerns over racism and data inaccuracies, the system continues to influence decision-making on imprisonment and parole – and a new system is in the works.

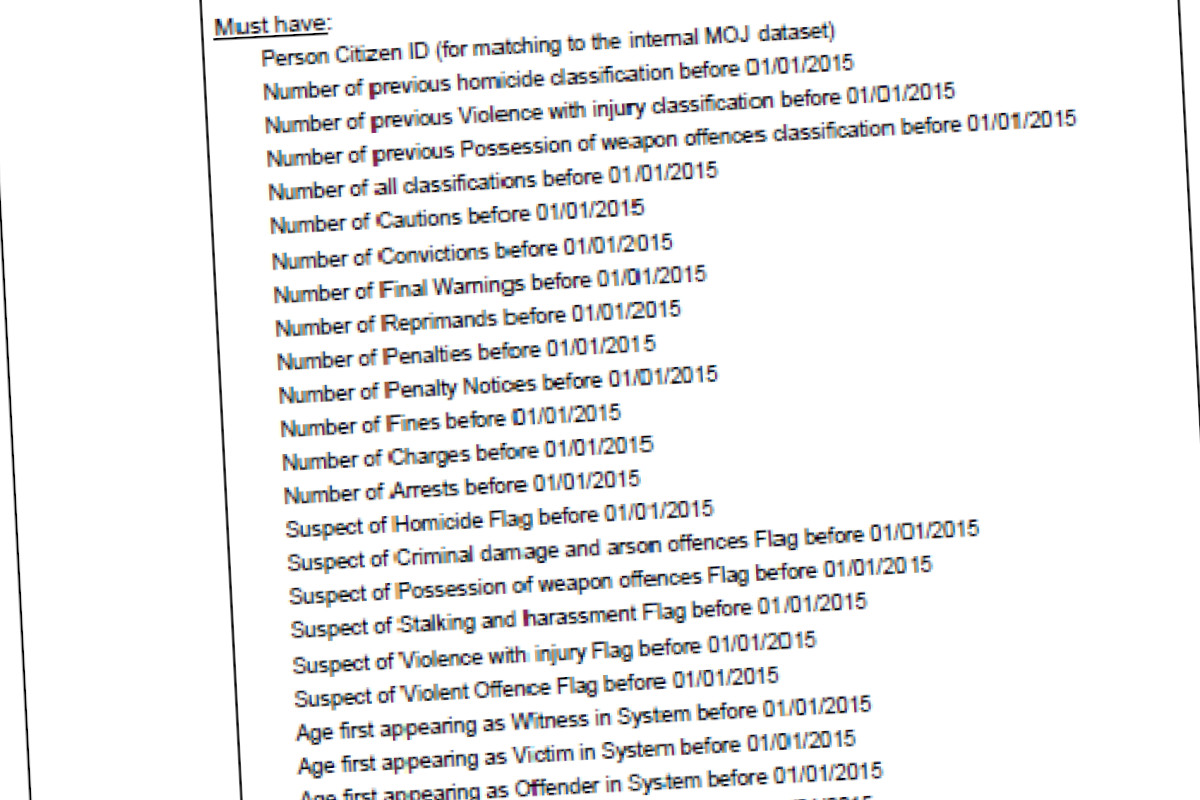

UK: Ministry of Justice secretly developing ‘murder prediction’ system

The Ministry of Justice is developing a system that aims to ‘predict’ who will commit murder, as part of a “data science” project using sensitive personal data on hundreds of thousands of people.

Belgium: New report calls for a ban on 'predictive' policing technologies

Following an investigation carried out over the past two years, Statewatch, the Ligue des droits humains and the Liga voor mensenrechten have jointly published a report on the development of ‘predictive’ policing in Belgium. There are inherent risks in these systems, particularly when they rely on biased databases or sociodemographic statistics. The report calls for a ban on ‘predictive’ systems in law enforcement.