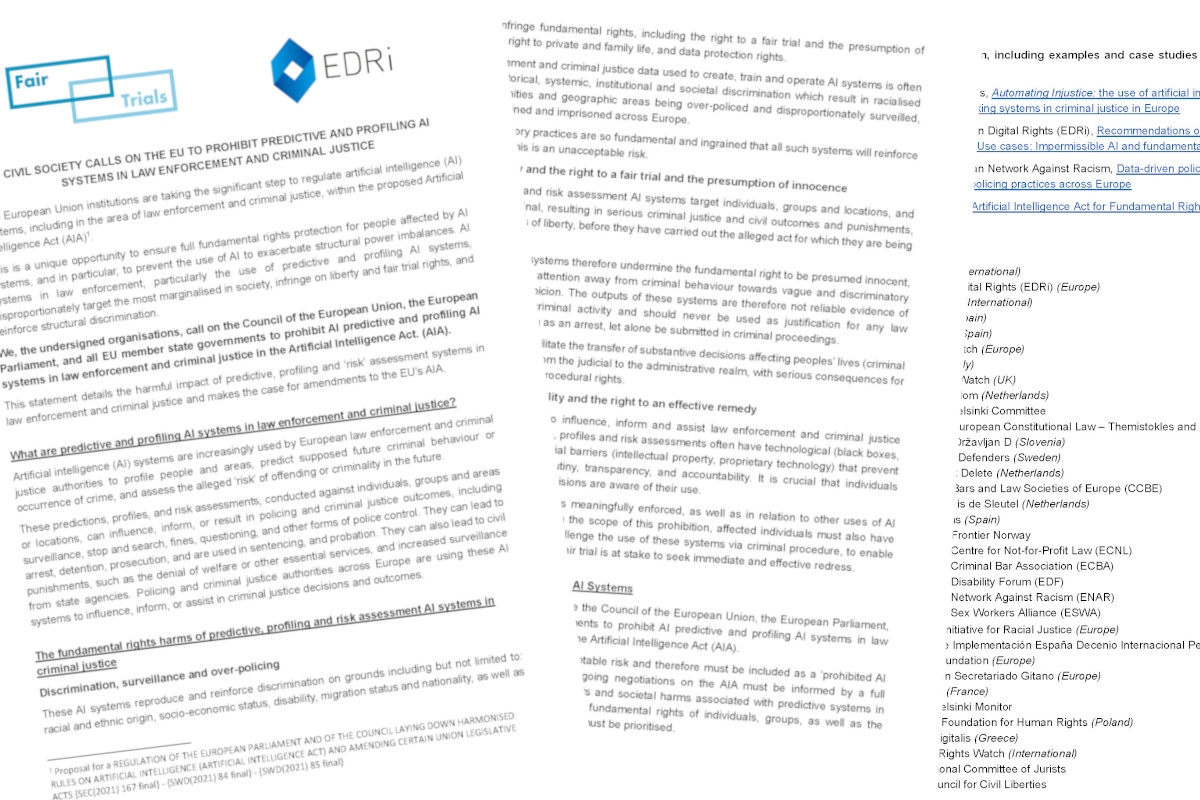

Civil society calls on the EU to prohibit predictive and profiling AI systems in law enforcement and criminal justice

01 March 2022

45 organisations, including Statewatch, are calling on EU decision-makers to prohibit the use of predictive and profiling "artificial intelligence" (AI) systems in the realm of law enforcement and criminal justice, a move that will "ensure full fundamental rights protection for people affected by AI systems, and in particular... prevent the use of AI to exacerbate structural power imbalances."

Support our work: become a Friend of Statewatch from as little as £1/€1 per month.

The statement was coordinated by Fair Trials and EDRi. Full text below.

The European Union institutions are taking the significant step of regulating artificial intelligence (AI) systems, including in the area of law enforcement and criminal justice, within the proposed Artificial Intelligence Act (AIA).

This is a unique opportunity to ensure full fundamental rights protection for people affected by AI systems, and in particular, to prevent the use of AI to exacerbate structural power imbalances. AI systems in law enforcement, particularly the use of predictive and profiling AI systems, disproportionately target the most marginalised in society, infringe on liberty and fair trial rights, and reinforce structural discrimination.

We, the undersigned organisations, call on the Council of the European Union, the European Parliament, and all EU member state governments to prohibit predictive and profiling AI systems in law enforcement and criminal justice in the Artificial Intelligence Act (AIA).

This statement details the harmful impact of predictive, profiling and ‘risk’ assessment systems in law enforcement and criminal justice and makes the case for amendments to the EU’s AIA.

What are predictive and profiling AI systems in law enforcement and criminal justice?

Artificial intelligence (AI) systems are increasingly used by European law enforcement and criminal justice authorities to profile people and areas, predict supposed future criminal behaviour or occurrence of crime, and assess the alleged ‘risk’ of offending or criminality in the future.

These predictions, profiles, and risk assessments, conducted against individuals, groups and areas or locations, can influence, inform, or result in policing and criminal justice outcomes, including surveillance, stop and search, fines, questioning, and other forms of police control. They can lead to arrest, detention and prosecution, and are used in sentencing and probation. They can also lead to civil punishments, such as the denial of welfare or other essential services, and increased surveillance from state agencies. Policing and criminal justice authorities across Europe are using these AI systems to influence, inform, or assist in criminal justice decisions and outcomes.

The fundamental rights harms of predictive, profiling and risk assessment AI systems in criminal justice

Discrimination, surveillance and over-policing

These AI systems reproduce and reinforce discrimination on grounds including but not limited to racial and ethnic origin, socio-economic status, disability, migration status and nationality. They also engage and infringe fundamental rights, including the right to a fair trial and the presumption of innocence, the right to private and family life, and data protection rights.

The law enforcement and criminal justice data used to create, train and operate AI systems often reflects historical, systemic, institutional and societal discrimination, which results in racialised people, communities and geographic areas being over-policed and disproportionately surveilled, questioned, detained and imprisoned across Europe.

These discriminatory practices are so fundamental and ingrained that all such systems will reinforce such outcomes. This is an unacceptable risk.

The right to liberty and the right to a fair trial and the presumption of innocence

Predictive, profiling and risk assessment AI systems target individuals, groups and locations, and profile them as criminal, resulting in serious criminal justice and civil outcomes and punishments, including deprivations of liberty, before they have carried out the alleged act for which they are being profiled.

By their nature, these systems therefore undermine the fundamental right to be presumed innocent, shifting criminal justice attention away from criminal behaviour towards vague and discriminatory notions of risk and suspicion. The outputs of these systems are therefore not reliable evidence of actual or prospective criminal activity and should never be used as justification for any law enforcement action, such as an arrest, let alone be submitted in criminal proceedings.

Further, such systems facilitate the transfer of substantive decisions affecting people’s lives (criminal justice, child protection) from the judicial to the administrative realm, with serious consequences for fair trial, liberty and other procedural rights.

Transparency, accountability and the right to an effective remedy

AI systems that are used to influence, inform and assist law enforcement and criminal justice decisions through predictions, profiles and risk assessments often have technological (black boxes, neural networks) or commercial barriers (intellectual property, proprietary technology) that prevent effective and meaningful scrutiny, transparency, and accountability. It is crucial that individuals affected by these systems’ decisions are aware of their use.

To ensure that the prohibition is meaningfully enforced, as well as in relation to other uses of AI systems which do not fall within the scope of this prohibition, affected individuals must also have clear and effective routes to challenge the use of these systems via criminal procedure, to enable those whose liberty or right to a fair trial is at stake to seek immediate and effective redress.

Prohibit Predictive and Profiling AI Systems

The undersigned organisations urge the Council of the European Union, the European Parliament, and all EU member state governments to prohibit predictive and profiling AI systems in law enforcement and criminal justice in the Artificial Intelligence Act (AIA).

Such systems amount to an unacceptable risk and therefore must be included as a ‘prohibited AI practice’ in Article 5 of the AIA. Ongoing negotiations on the AIA must be informed by a full consideration of the fundamental rights and societal harms associated with predictive systems in policing and criminal justice, and the fundamental rights of individuals, groups, as well as the consequences for democratic society, must be prioritised.

For further information, including examples and case studies and further analysis, please see:

- Fair Trials, Automating Injustice: the use of artificial intelligence and automated decision-making systems in criminal justice in Europe

- European Digital Rights (EDRi), Recommendations on the EU’s Artificial Intelligence Act and Use cases: Impermissible AI and fundamental rights breaches

- European Network Against Racism, Data-driven policing: the hard-wiring of discriminatory policing practices across Europe

- An EU Artificial Intelligence Act for Fundamental Rights – A Civil Society Statement

SIGNED

- Fair Trials (International)

- European Digital Rights (EDRi) (Europe)

- Access Now (International)

- AlgoRace (Spain)

- AlgoRights (Spain)

- AlgorithmWatch (Europe)

- Antigone (Italy)

- Big Brother Watch (UK)

- Bits of Freedom (Netherlands)

- Bulgarian Helsinki Committee

- Centre for European Constitutional Law – Themistokles and Dimitris Tsatsos

- Citizen D / Državljan D (Slovenia)

- Civil Rights Defenders (Sweden)

- Controle Alt Delete (Netherlands)

- Council of Bars and Law Societies of Europe (CCBE)

- De Moeder is de Sleutel (Netherlands)

- Digital Fems (Spain)

- Electronic Frontier Norway

- European Centre for Not-for-Profit Law (ECNL)

- European Criminal Bar Association (ECBA)

- European Disability Forum (EDF)

- European Network Against Racism (ENAR)

- European Sex Workers Alliance (ESWA)

- Equinox Initiative for Racial Justice (Europe)

- Equipo de Implementación España Decenio Internacional Personas Afrodescendientes

- Eticas Foundation (Europe)

- Fundación Secretariado Gitano (Europe)

- Ghett’Up (France)

- Greek Helsinki Monitor

- Helsinki Foundation for Human Rights (Poland)

- Homo Digitalis (Greece)

- Human Rights Watch (International)

- International Committee of Jurists

- Irish Council for Civil Liberties

- Iuridicum Remedium (IuRe) (Czech Republic)

- Ligue des Droits Humains (Belgium)

- Novact (Spain)

- Observatorio de Derechos Humanos y Empresas en la Mediterránea (ODHE)

- Open Society European Policy Institute

- Panoptykon Foundation (Poland)

- PICUM (Europe)

- Refugee Law Lab (Canada)

- Rights International Spain

- Statewatch (Europe)

- ZARA – Zivilcourage und Anti-Rassismus-Arbeit (Austria)

